Creating the Azure Storage Account

In Azure Bits #1 – Up and Running, we got a skeleton in place for our web application that will allow the user to upload an image and we published our Azure Image Manipulator Web App to Azure. Our next task is taking this uploaded image and saving it into Azure Blob Storage.

The first thing we need to do is to create an Azure Storage account in the Azure Portal. Once logged into the Portal, you’ll want to click on the big green plus sign for New in the top left.

Next, you’ll want to select Data + Storage and then Storage to get to the configuration blade for your new storage account.

Here, you’ll want to enter a name for your Azure Storage account. Note that this name must be globally unique and, because it becomes part of the URL for everything you store, it must be 3 to 24 characters long and contain only lowercase letters and numbers. You’ll also want to select the physical location closest to the consumers of your data, since latency and data transfer costs can increase with the distance between the consumer and the storage region. You can leave the rest of the information with the defaults and then click the Create button. Azure will grind away for a bit to create your storage account as you watch an animation on your home screen.

There’s a much more in-depth article specifically on Azure Storage Accounts at the Azure site that you may find interesting, though don’t be alarmed that the screenshots there differ from what I have here or what you might actually encounter on the Azure Portal itself. The Azure folks have been tweaking the look of the Azure Preview Portal pretty regularly.
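The naming rule for storage accounts can be checked before you ever open the Portal. The following is a minimal sketch, not part of the Image Manipulator project: the StorageAccountNames class and its IsValid method are hypothetical names chosen for illustration, and the check covers only length and allowed characters. It cannot tell you whether a name is already taken globally.

```csharp
using System.Linq;

public static class StorageAccountNames
{
    // Hypothetical helper: storage account names are 3 to 24 characters long
    // and may contain only lowercase letters and digits.
    public static bool IsValid(string name)
    {
        if (string.IsNullOrEmpty(name)) return false;
        if (name.Length < 3 || name.Length > 24) return false;

        // Reject uppercase letters, dashes, dots, and anything else
        // that is not a lowercase ASCII letter or digit.
        return name.All(c => (c >= 'a' && c <= 'z') || (c >= '0' && c <= '9'));
    }
}
```

With this sketch, a name such as imagemanipulator passes, while Image-Manipulator is rejected because of the uppercase letters and the dash.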

Eventually, the Azure Storage account will be created and you will be presented with the dashboard page for your new storage account.

Adding the Container

In Azure Blob Storage, each blob must live inside a Container. A Container is just a way to group blobs and is used as part of the URL that is created for each blob. An Azure Storage account can contain an unlimited number of Containers and each Container can contain an unlimited number of blobs, up to the capacity limit of the storage account.
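To make the role of the Container in the blob URL concrete, here is a small sketch of how the account name, container name, and blob name combine. The BlobUrls class and its Build method are hypothetical names for illustration only, and the sketch assumes the default public endpoint (blob.core.windows.net) and a blob name that is a single path segment.

```csharp
using System;

public static class BlobUrls
{
    // Hypothetical helper: composes https://{account}.blob.core.windows.net/{container}/{blob}.
    public static Uri Build(string accountName, string containerName, string blobName)
    {
        // Escape the blob name so spaces and other reserved characters survive in the URL.
        // This treats the blob name as one segment; a '/' in the name would be escaped too.
        var path = string.Format("{0}/{1}", containerName, Uri.EscapeDataString(blobName));

        return new Uri(string.Format("https://{0}.blob.core.windows.net/{1}", accountName, path));
    }
}
```

Calling BlobUrls.Build("imagemanipulator", "images", "cat.png") yields a URL of the same shape as the container URL used in this article, with the blob name appended.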

So, let’s add a Container so we’ll have somewhere to store our images. In the Summary area, click the Containers link to show the containers for this storage account, and then click the white plus icon just below the Containers header.

In the Add a Container blade, enter a name for your container, select Blob as the access type, and click OK.

Once your container is created, it will be displayed in the list of containers. Copy the URL that is created for your container from the URL column. Let’s go ahead and copy that into our web.config file as we’ll soon need it.

Configuration Settings

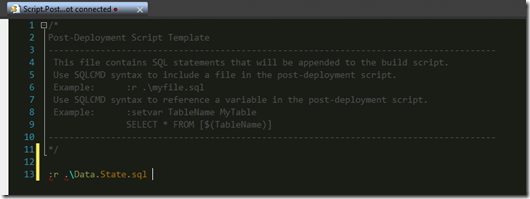

Open the Visual Studio solution we created in the last Azure Bit so we can wire up saving of our UploadedImage into our newly created Azure Storage account.

While you’ve still got that URL in your clipboard, let’s paste that into the appSettings of our web.config file as ImageRootPath. Also, go ahead and add an appSetting for your Container name as we will need that as well.

web.config

    <appSettings>
      <add key="ImageRootPath" value="https://imagemanipulator.blob.core.windows.net/images" />
      <add key="ImagesContainer" value="images" />
    </appSettings>

Since we already have web.config open, let’s go ahead and grab the connection string for our storage account and add that to our connectionStrings in web.config. In the main dashboard for your storage account, click the All Settings link and then select Keys so that you can see the various key settings for your storage account, including the connection strings. You should be seeing something that looks roughly like the below. Note that I’ve masked some of my super secret keys in this screenshot.

It’s very important that you guard your keys, as anyone who obtains them gains unfettered access to your storage account. If you find that your keys have been compromised, you can always regenerate them by using the buttons just under the Manage keys header in the Azure Portal. There are also various rotation strategies you can employ to automate the exchanging of primary and secondary keys, but that is beyond the scope of this series.

Now copy the value given for Primary Connection String and add this to the connectionStrings section of web.config:

web.config

    <connectionStrings>
      <add name="BlobStorageConnectionString"
           connectionString="DefaultEndpointsProtocol=https;AccountName=imagemanipulator;AccountKey=XXXXXXXXXXXXX" />
    </connectionStrings>
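A connection string that was pasted incompletely tends to fail later, at the first storage call. As a rough sketch of what the string contains, the following splits it into its key/value pairs and checks that the account name and key are present. The ConnectionStringCheck class and its methods are hypothetical and not part of the project; the Azure Storage SDK does its own parsing, so this is only a quick sanity check you could run at startup.

```csharp
using System;
using System.Collections.Generic;

public static class ConnectionStringCheck
{
    // Hypothetical helper: splits "Key=Value;Key=Value" into a dictionary.
    public static IDictionary<string, string> Parse(string connectionString)
    {
        var settings = new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase);
        var parts = connectionString.Split(new[] { ';' }, StringSplitOptions.RemoveEmptyEntries);

        foreach (var part in parts)
        {
            // Split on the first '=' only: Base64 account keys often end in '='.
            var idx = part.IndexOf('=');
            if (idx <= 0)
            {
                // Do not echo the offending text; it may contain part of the key.
                throw new FormatException("Connection string contains a segment that is not key=value.");
            }
            settings[part.Substring(0, idx).Trim()] = part.Substring(idx + 1).Trim();
        }
        return settings;
    }

    // True when both settings needed to reach the account are present.
    public static bool LooksComplete(string connectionString)
    {
        var settings = Parse(connectionString);
        return settings.ContainsKey("AccountName") && settings.ContainsKey("AccountKey");
    }
}
```

Note that the exception message deliberately leaves out the malformed segment so that a fragment of the account key never ends up in a log file.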

Next, we’ll update ImageService to grab the values we just placed in web.config and assign these to private fields in ImageService. In addition, now that we have these values, we can construct and assign the URL for our UploadedImage in the CreateUploadedImage method.

ImageService.cs

    using System.Configuration;
    using System.Threading.Tasks;
    using System.Web;

    public class ImageService : IImageService
    {
        private readonly string _imageRootPath;
        private readonly string _containerName;
        private readonly string _blobStorageConnectionString;

        public ImageService()
        {
            // read the settings we just added to web.config
            _imageRootPath = ConfigurationManager.AppSettings["ImageRootPath"];
            _containerName = ConfigurationManager.AppSettings["ImagesContainer"];
            _blobStorageConnectionString = ConfigurationManager.ConnectionStrings["BlobStorageConnectionString"].ConnectionString;
        }

        public async Task<UploadedImage> CreateUploadedImage(HttpPostedFileBase file)
        {
            if ((file != null) && (file.ContentLength > 0) && !string.IsNullOrEmpty(file.FileName))
            {
                byte[] fileBytes = new byte[file.ContentLength];

                // a single ReadAsync call may return fewer bytes than requested,
                // so keep reading until the buffer is full or the stream ends
                int offset = 0;
                while (offset < fileBytes.Length)
                {
                    int read = await file.InputStream.ReadAsync(fileBytes, offset, fileBytes.Length - offset);
                    if (read == 0) break;
                    offset += read;
                }

                return new UploadedImage
                {
                    ContentType = file.ContentType,
                    Data = fileBytes,
                    Name = file.FileName,
                    Url = string.Format("{0}/{1}", _imageRootPath, file.FileName)
                };
            }
            return null;
        }
    }
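One consequence of naming the blob after the uploaded file is that a second upload with the same file name will overwrite the first. If that matters for your scenario, one option is to generate a unique blob name and keep only the extension. The sketch below is an assumption-laden illustration rather than part of the series: the BlobNames class and CreateUnique method are hypothetical, and the GUID-based scheme is just one of several reasonable choices.

```csharp
using System;

public static class BlobNames
{
    // Hypothetical helper: returns a unique name such as "3f2c...e1.png".
    public static string CreateUnique(string uploadedFileName)
    {
        // Some browsers send a full client path; keep only the last segment.
        var lastSeparator = uploadedFileName.LastIndexOfAny(new[] { '/', '\\' });
        var fileName = uploadedFileName.Substring(lastSeparator + 1);

        // Preserve the extension (lowercased) so the blob URL still looks like an image.
        var dot = fileName.LastIndexOf('.');
        var extension = dot >= 0 ? fileName.Substring(dot).ToLowerInvariant() : string.Empty;

        // "N" formats the GUID as 32 hex digits without dashes.
        return Guid.NewGuid().ToString("N") + extension;
    }
}
```

If you adopt something like this, the same generated name would need to be used for both the Name and the Url of the UploadedImage so that the two stay in sync.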

Wiring the Image Service to Upload

For this next section, we will need to bring in some additional packages via NuGet. To do this, right-click on the web project, select Manage NuGet Packages, search for WindowsAzure.Storage, and click Install. This will bring in all of the NuGet packages needed for interaction with Azure Storage, so be sure to click “I Accept” when prompted.

The flow for saving our image to Azure Blob Storage is that we first get a reference to our Storage Account and use that to create a reference to our Container. Once we have our Container, we configure it to allow public access and use it to get a reference to a block blob, which is the blob type suited to files such as images. Lastly, we set the content type on the block blob so that it is served as an image, and we kick off the upload to our Container using the name we generated earlier. Let’s now see what that looks like in some (heavily-commented) code.

First, add the new method to the IImageService interface:

IImageService.cs

    public interface IImageService
    {
        Task<UploadedImage> CreateUploadedImage(HttpPostedFileBase file);
        Task AddImageToBlobStorageAsync(UploadedImage image);
    }

And now, implement the new method in ImageService:

ImageService.cs

    // requires: using Microsoft.WindowsAzure.Storage;
    //           using Microsoft.WindowsAzure.Storage.Blob;

    public async Task AddImageToBlobStorageAsync(UploadedImage image)
    {
        // get the container reference
        var container = GetImagesBlobContainer();

        // using the container reference, get a block blob reference and set its type
        CloudBlockBlob blockBlob = container.GetBlockBlobReference(image.Name);
        blockBlob.Properties.ContentType = image.ContentType;

        // finally, upload the image into blob storage using the block blob reference
        var fileBytes = image.Data;
        await blockBlob.UploadFromByteArrayAsync(fileBytes, 0, fileBytes.Length);
    }

    private CloudBlobContainer GetImagesBlobContainer()
    {
        // use the connection string to get the storage account
        var storageAccount = CloudStorageAccount.Parse(_blobStorageConnectionString);

        // using the storage account, create the blob client
        var blobClient = storageAccount.CreateCloudBlobClient();

        // finally, using the blob client, get a reference to our container
        var container = blobClient.GetContainerReference(_containerName);

        // if we had not created the container in the portal, this would automatically create it for us at run time
        container.CreateIfNotExists();

        // by default, blobs are private and would require your access key to download.
        // You can allow public access to the blobs by making the container public.
        container.SetPermissions(
            new BlobContainerPermissions
            {
                PublicAccess = BlobContainerPublicAccessType.Blob
            });

        return container;
    }

One thing to note about the public access we set on our Container, since I know that PublicAccess = BlobContainerPublicAccessType.Blob might make some folks nervous: Containers are created by default with private access. We are updating our Container to allow read-only anonymous access to the individual images; at the Blob level, anonymous callers still cannot list the contents of the Container. We will still need our private access keys in order to add, delete, or edit images in the Container.
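For comparison, the same container setup can be written with the asynchronous variants of the SDK calls, which fits more naturally inside an async upload path. This is a sketch under assumptions: it requires the WindowsAzure.Storage NuGet package, the ContainerSetup class and GetPublicReadContainerAsync method are hypothetical names, and it has not been wired into the ImageService shown in this article. The access level is the one setting to think about: Off keeps the container private, Blob allows anonymous reads of individual blobs, and Container additionally allows anonymous listing.

```csharp
using System.Threading.Tasks;
using Microsoft.WindowsAzure.Storage;
using Microsoft.WindowsAzure.Storage.Blob;

public static class ContainerSetup
{
    // Hypothetical helper: returns a container that allows anonymous blob reads.
    public static async Task<CloudBlobContainer> GetPublicReadContainerAsync(
        string connectionString, string containerName)
    {
        var account = CloudStorageAccount.Parse(connectionString);
        var client = account.CreateCloudBlobClient();
        var container = client.GetContainerReference(containerName);

        // Creates the container only if it is missing; a no-op otherwise.
        await container.CreateIfNotExistsAsync();

        // Blob-level access: anyone with the URL can read a blob,
        // but listing the container still requires the account key.
        await container.SetPermissionsAsync(new BlobContainerPermissions
        {
            PublicAccess = BlobContainerPublicAccessType.Blob
        });

        return container;
    }
}
```

Switching the value to BlobContainerPublicAccessType.Off would make the images private again, at which point the plain image URLs used by the web page would stop working for anonymous visitors.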

The last step for the upload process is to actually call the AddImageToBlobStorageAsync method from our HomeController’s Upload action method.

HomeController.cs

    [HttpPost]
    public async Task<ActionResult> Upload(FormCollection formCollection)
    {
        if (Request != null)
        {
            HttpPostedFileBase file = Request.Files["uploadedFile"];
            var uploadedImage = await _imageService.CreateUploadedImage(file);

            // CreateUploadedImage returns null when no valid file was posted
            if (uploadedImage != null)
            {
                await _imageService.AddImageToBlobStorageAsync(uploadedImage);
            }
        }
        return View("Index");
    }

At this point, you should be able to run the application and upload an image. It should save to Azure Blob Storage and then appear in your web page. You can right-click on the image and choose Inspect Element or View Properties (depending on your browser) to see that the image is actually being served from your Azure Storage account.

Debugging with Azure Storage Explorer

Now that you have the site working and saving to Azure Blob Storage, I’ll point you to one of my favorite debugging tools for working with Azure Storage. There are several tools for viewing and manipulating the contents of Azure Storage accounts, but my favorite is Azure Storage Explorer. Once you install Azure Storage Explorer, click the Add Account button in the top menu. To get the values needed for the Add Storage Account dialog, return to the All Settings blade for your storage account in the Azure Portal. The first two settings there are the ones you need for creating a new account in Azure Storage Explorer.

Once you have the storage account configured in Azure Storage Explorer, you can view the contents of your container(s).

You can double-click on any of the items to open the View Blob dialog. Its Properties Tab shows everything you could possibly want to know about your blob item and even lets you change most of the properties directly from the interface. If you then click the Content Tab, select Image, and click the View button, you can see your image.

Let’s Get it to the Cloud

As a final step, we want to publish all of this new Azure Image Manipulator goodness that we’ve created in these first two Azure Bits back out to our Azure website. You do this by right-clicking on the web project in Solution Explorer and choosing “Publish...”. Once Visual Studio completes the publishing process, you should be able to run your Azure website and upload an image to Azure Blob Storage just as you did when running against localhost earlier.

Now that we have our original image safely stored away in Azure Blob Storage, we need to give notice that our image is ready for processing. We’ll do this by placing a message in an Azure Queue.

In the next Azure Bit, I’ll walk through setting up an Azure Queue and inserting a message into the queue signaling that our image is ready for manipulation.

Did you miss the first Azure Bit? You can read Azure Bits #1 – Up and Running at the Wintellect DevCenter.

Originally published: 2015/05/22